Eval

Evaluating agents:

- Start early.

- Source realistic tasks from failures.

- Define unambiguous, robust success criteria.

- Design graders thoughtfully and combine multiple types (code-based, model-based, human).

- Make sure the problems are hard enough for the model.

- Iterate on evaluations to improve their signal-to-noise ratio.

- Read the transcripts (the full records of each run).

- Pick a framework, e.g. promptfoo or Harbor.
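Here is a minimal Python sketch of a code-based grader with an unambiguous, robust success criterion. It assumes tasks with a single numeric answer. `Task`, `extract_number`, `grade`, and `pass_rate` are hypothetical names, and the agent is any callable that maps a prompt to text. The grader tolerates formatting noise, such as thousands separators and surrounding prose, instead of demanding an exact string. `pass_rate` runs each task several times, because a single run of a stochastic agent is a noisy signal.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Task:
    # Hypothetical task format: a prompt with a single numeric reference answer.
    prompt: str
    expected: float
    tolerance: float = 1e-6

def extract_number(text: str) -> Optional[float]:
    """Return the last number in free-form output, ignoring thousands separators."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", text.replace(",", ""))
    return float(matches[-1]) if matches else None

def grade(task: Task, output: str) -> bool:
    """Pass iff the final number is within tolerance of the reference answer."""
    value = extract_number(output)
    return value is not None and abs(value - task.expected) <= task.tolerance

def pass_rate(task: Task, agent: Callable[[str], str], trials: int = 5) -> float:
    """Run the same task several times and average, since agent runs are stochastic."""
    return sum(grade(task, agent(task.prompt)) for _ in range(trials)) / trials

# Usage with a stand-in agent that always gives the same answer.
task = Task(prompt="What is 1,200 + 34?", expected=1234.0)
print(pass_rate(task, lambda prompt: "The answer is 1,234."))  # 1.0
```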

| Method | 👍 Strengths | 👎 Weaknesses |
|---|---|---|
| Human Evaluation | Captures nuanced behavior | Subjective, time-consuming, expensive, difficult to scale |
| LLM-as-a-Judge | Consistent, scalable, efficient | May overlook intermediate steps, limited by LLM capabilities |
| Automated Metrics | Objective, scalable, efficient | May not capture full capabilities |
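The three methods above can be combined in one grading step. In this Python sketch, a deterministic code check acts as a gate and an LLM judge scores what code cannot see. Borderline judge scores are routed to human review. `llm_judge` is a hypothetical callable that stands in for whatever model call you use, and it is assumed to return a score in [0, 1]. The threshold and review band are arbitrary placeholders that you would need to tune.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Verdict:
    passed: bool
    score: float
    needs_human_review: bool

def combined_grade(
    output: str,
    code_check: Callable[[str], bool],   # automated metric: objective, cheap
    llm_judge: Callable[[str], float],   # hypothetical judge: quality score in [0, 1]
    judge_threshold: float = 0.7,        # placeholder value
    review_band: float = 0.15,           # placeholder value
) -> Verdict:
    # 1. A hard failure on the objective check short-circuits; no judge call needed.
    if not code_check(output):
        return Verdict(passed=False, score=0.0, needs_human_review=False)
    # 2. The model-based judge covers nuanced quality that the code check cannot see.
    score = llm_judge(output)
    passed = score >= judge_threshold
    # 3. Scores close to the threshold go to a human instead of trusting the judge blindly.
    borderline = abs(score - judge_threshold) < review_band
    return Verdict(passed=passed, score=score, needs_human_review=borderline)

# Usage with stand-ins: a substring check and a fixed fake judge score.
v = combined_grade("final answer: 42", lambda o: "42" in o, lambda o: 0.65)
print(v)  # Verdict(passed=False, score=0.65, needs_human_review=True)
```

Running the cheap check first keeps judge calls, and therefore cost, limited to outputs that are at least structurally correct.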

Trace

When building agents, the trace is the source of truth:

- Debugging becomes trace analysis.

- Testing becomes eval-driven.

- You can't set breakpoints in the model's reasoning.

- Performance optimization targets different metrics: task success rate, reasoning quality, and tool-use efficiency.

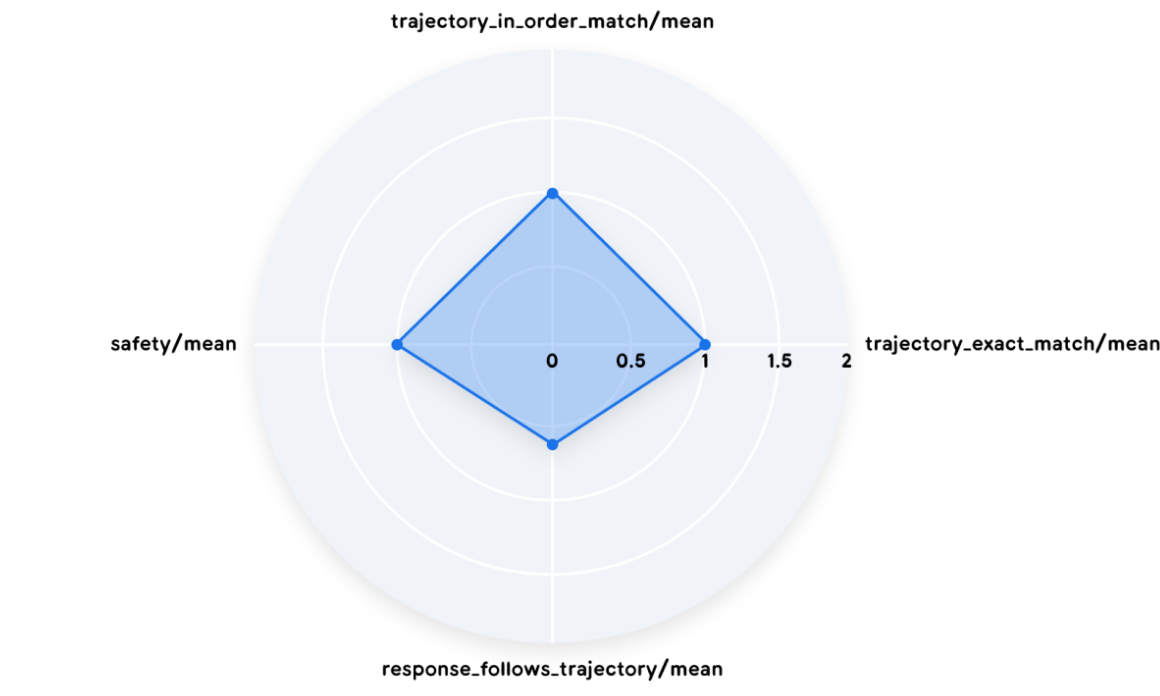
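This minimal Python sketch makes "debugging becomes trace analysis" concrete. It uses a hypothetical trace schema: `Step` and `Trace` are illustrative and are not any tracing library's format. The code summarizes tool-usage efficiency for each trace, finds the first failing step, and computes the task success rate across traces.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Step:
    kind: str                    # hypothetical schema: "llm" or "tool"
    name: str                    # model or tool name
    args: str = ""               # serialized tool arguments
    error: Optional[str] = None  # set when the step failed

@dataclass
class Trace:
    task_id: str
    steps: list[Step] = field(default_factory=list)
    succeeded: bool = False

def summarize(trace: Trace) -> dict:
    """Per-trace tool-usage efficiency: call count, errors, exact repeats."""
    tool_steps = [s for s in trace.steps if s.kind == "tool"]
    seen, duplicates = set(), 0
    for s in tool_steps:
        key = (s.name, s.args)
        if key in seen:
            duplicates += 1  # identical call repeated, often a sign of looping
        seen.add(key)
    return {
        "task_id": trace.task_id,
        "succeeded": trace.succeeded,
        "tool_calls": len(tool_steps),
        "tool_errors": sum(1 for s in tool_steps if s.error is not None),
        "duplicate_tool_calls": duplicates,
    }

def first_failure(trace: Trace) -> Optional[Step]:
    """Jump straight to the first step that errored, if any."""
    return next((s for s in trace.steps if s.error is not None), None)

def success_rate(traces: list[Trace]) -> float:
    """Task success rate across a batch of traces."""
    return sum(t.succeeded for t in traces) / len(traces) if traces else 0.0

trace = Trace("t1", [
    Step("llm", "planner"),
    Step("tool", "search", "q=refund policy"),
    Step("tool", "search", "q=refund policy"),
    Step("tool", "read_file", "policy.md", error="file not found"),
])
print(summarize(trace))
# {'task_id': 't1', 'succeeded': False, 'tool_calls': 3, 'tool_errors': 1, 'duplicate_tool_calls': 1}
```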

Trajectory

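The trajectory is as important as the final response. Ways to score it:

- Exact match: the agent produces a trajectory that exactly mirrors the ideal solution.

- In-order match: the agent completes the expected trajectory in order; extra actions are allowed and not penalized.

- Any-order match: the agent performs all the necessary actions, in any order.

- Precision: the fraction of the agent's tool calls that are relevant.

- Recall: the fraction of the essential tool calls that the agent actually makes.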
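The trajectory metrics above can be sketched in Python. This sketch assumes each trajectory is a list of action strings, such as tool names, and that there is a single reference trajectory. Counting repeated actions with `Counter` is a design choice made here; a set-based variant would ignore duplicates. The empty-input conventions are also assumptions: precision is 0 when the agent took no actions, and recall is 1 when the reference requires none.

```python
from collections import Counter

def exact_match(pred: list[str], ref: list[str]) -> bool:
    """Identical actions in identical order."""
    return pred == ref

def in_order_match(pred: list[str], ref: list[str]) -> bool:
    """ref occurs as a subsequence of pred; extra actions are not penalized."""
    remaining = iter(pred)
    # `in` advances the iterator, so each match must come after the previous one.
    return all(action in remaining for action in ref)

def any_order_match(pred: list[str], ref: list[str]) -> bool:
    """Every reference action appears in pred (with multiplicity), in any order."""
    return not (Counter(ref) - Counter(pred))

def _overlap(pred: list[str], ref: list[str]) -> int:
    # Multiset intersection: an action counts at most as often as it appears in both.
    return sum((Counter(pred) & Counter(ref)).values())

def precision(pred: list[str], ref: list[str]) -> float:
    """Share of the agent's actions that are relevant (assumed 0.0 if it took none)."""
    return _overlap(pred, ref) / len(pred) if pred else 0.0

def recall(pred: list[str], ref: list[str]) -> float:
    """Share of essential actions the agent took (assumed 1.0 if none are required)."""
    return _overlap(pred, ref) / len(ref) if ref else 1.0

ref = ["search", "read", "answer"]
pred = ["search", "search", "read", "answer"]
print(exact_match(pred, ref), in_order_match(pred, ref), any_order_match(pred, ref))
# False True True
print(precision(pred, ref), recall(pred, ref))  # 0.75 1.0
```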

Benchmarks

- Aggregate: Don't obsess over a 1-2% lead on a single benchmark; look at performance across a comprehensive set of benchmarks for your specific domain.

- Relative: Compare within the same model family or lab: how did the score change from v1 to v2?

- Verify: At the end of the day, the only benchmark that matters is your own workload.
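A 1-2 point lead is often just noise. This rough sketch shows why by computing an approximate 95% confidence interval for the difference between two accuracy scores on an n-task benchmark. It uses the normal approximation and treats the two runs as independent. A paired test on the same tasks would usually give a tighter interval, so treat this as a back-of-the-envelope check. `diff_ci` is a hypothetical helper name, and the scores in the usage line are illustrative, not real results.

```python
import math

def diff_ci(p_a: float, p_b: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate CI for accuracy(A) - accuracy(B) on the same n-task benchmark.

    Normal approximation; treats the two runs as independent binomial samples
    and ignores that both models saw the same tasks.
    """
    se = math.sqrt(p_a * (1 - p_a) / n + p_b * (1 - p_b) / n)
    diff = p_a - p_b
    return diff - z * se, diff + z * se

# Illustrative numbers only: 72% vs. 70% on a 500-task benchmark.
lo, hi = diff_ci(0.72, 0.70, 500)
print(round(lo, 3), round(hi, 3))  # -0.036 0.076: the interval contains 0
```

With these illustrative numbers, the 2-point gap cannot be distinguished from zero. The same gap on 20,000 tasks would yield an interval that excludes zero.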